Real-Time Recyclable Waste Classifier

2024

Overview

This project delivers an AI-powered solution to a common but often overlooked environmental challenge: accurately sorting recyclable waste in real time. Using deep learning, object detection, and embedded systems, I developed a lightweight yet effective classifier capable of identifying multiple categories of recyclable materials from live camera feeds. The system is built for deployment on low-power devices like the Raspberry Pi, making it scalable for real-world applications in cafeterias, recycling facilities, and urban waste management systems. By combining dataset curation, transfer learning, and model optimization, the project bridges the gap between high-accuracy computer vision models and the hardware constraints of embedded platforms.

Beyond technical achievement, the project demonstrates the potential of edge AI to automate labor-intensive tasks, reduce contamination in recycling streams, and improve sustainability efforts — all while running efficiently in resource-limited environments.

Problem Statement

Waste mismanagement leads to recyclable materials such as plastics, glass, and metals ending up in landfills. Manual sorting is labor-intensive and prone to errors, especially in high-volume environments like restaurants and cafeterias.

The challenge was to design a real-time object detection model that:

Accurately classifies waste into multiple categories

Runs efficiently on a resource-constrained device like a Raspberry Pi

Handles real-world variations in lighting, background, and object orientation

Methodology

Dataset Selection: Chose the Recyclable and Household Waste Classification dataset (15,000 images across 18 categories) after evaluating multiple public datasets.

Data Cleaning & Annotation: Removed low-quality/ambiguous images and annotated objects with bounding boxes using LabelImg.

Preprocessing: Resized and normalized images; applied data augmentation (flip, rotation, zoom, contrast adjustment).

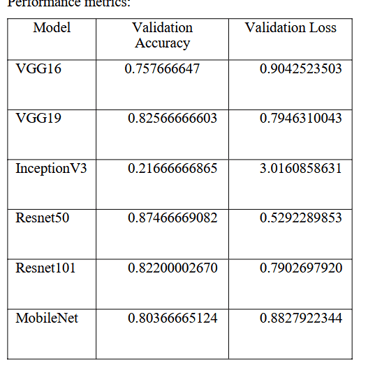

Model Evaluation: Tested multiple pre-trained CNNs (ResNet50, VGG19, MobileNet) with transfer learning to find the best accuracy–efficiency trade-off.

Final Model Selection: Selected SSD MobileNet V2 for object detection due to its lightweight design and suitability for embedded systems.

Optimization: Applied pruning and quantization; converted model to TensorFlow Lite for edge deployment.

Training: Configured TensorFlow Object Detection API with 30,000 training steps and batch size of 16.

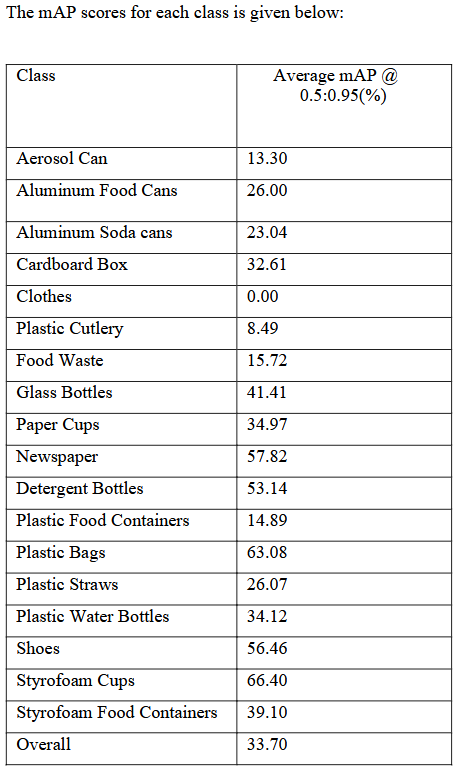

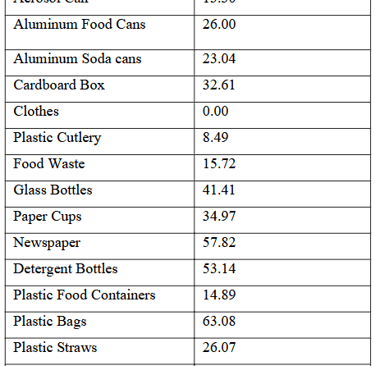

Testing & Evaluation: Measured performance using mAP @ 0.5:0.95; best-performing classes exceeded 60% mAP.

Deployment Trials: Successfully tested on laptop webcam; attempted Raspberry Pi deployment (camera hardware issue prevented final integration).

Evaluated multiple datasets: TrashNet, TACO, and Kaggle Waste Dataset.

Selected the Recyclable and Household Waste Classification Dataset, which contains 15,000 labeled images across 18 categories (12,000 used for training and 3,000 for validation). The images are split into two subsets:

"default": Studio-quality images

"real_world": Complex, natural background images

The dataset covers the following waste categories and items:

Plastic: water bottles, soda bottles, detergent bottles, shopping bags, food containers, disposable cutlery, straws

Paper and Cardboard: newspaper, cardboard boxes

Glass: beverage bottles, cosmetic containers

Metal: aluminum soda cans, aluminum food cans, aerosol cans

Organic Waste: food waste (fruit peels, vegetable scraps)

Textiles: clothing, shoes

Cleaned dataset by removing low-quality or ambiguous images.

Annotated object locations using LabelImg, generating Pascal VOC XML files.
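LabelImg's Pascal VOC output stores each annotated object as an `<object>` element with a `<name>` and a `<bndbox>`. As a minimal sketch of how such a file can be read back using only the standard library (the function name `read_voc_boxes` and the sample annotation are hypothetical and not taken from the project's data):

```python
import xml.etree.ElementTree as ET


def read_voc_boxes(xml_text):
    """Return a list of (class_name, xmin, ymin, xmax, ymax) tuples."""
    root = ET.fromstring(xml_text)
    boxes = []
    for obj in root.iter("object"):
        name = obj.findtext("name")
        bndbox = obj.find("bndbox")
        # Coordinates are stored as text; go through float() in case a tool
        # wrote them with a decimal point.
        coords = tuple(
            int(float(bndbox.findtext(tag)))
            for tag in ("xmin", "ymin", "xmax", "ymax")
        )
        boxes.append((name, *coords))
    return boxes


# Hypothetical annotation for illustration only.
SAMPLE_XML = """<annotation>
  <filename>example.jpg</filename>
  <size><width>640</width><height>480</height><depth>3</depth></size>
  <object>
    <name>aluminum_soda_can</name>
    <bndbox><xmin>120</xmin><ymin>80</ymin><xmax>260</xmax><ymax>330</ymax></bndbox>
  </object>
</annotation>"""

print(read_voc_boxes(SAMPLE_XML))
```

Each tuple holds the class label followed by the pixel coordinates of the box corners, which is the information the later conversion steps rely on.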

Dataset Curation & Preprocessing

Model Selection & Training

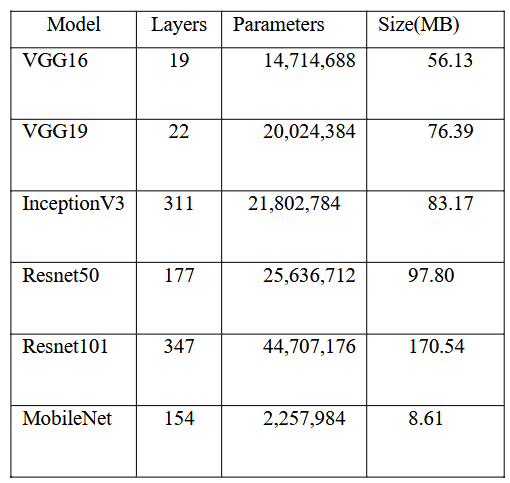

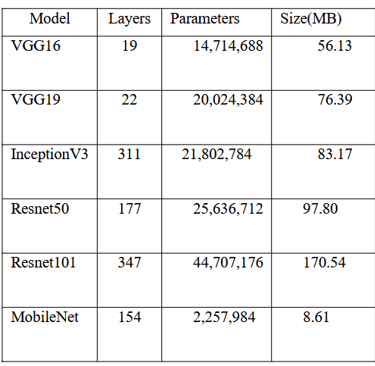

Tested multiple pre-trained convolutional neural networks (CNNs) with transfer learning. The model parameters are given below:

Used data augmentation (random flipping, rotation, zoom, contrast adjustment) to improve generalization.

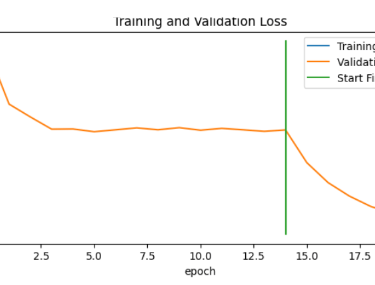

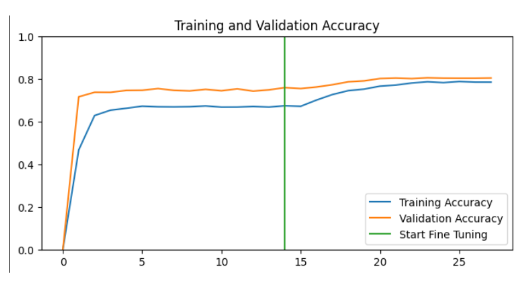

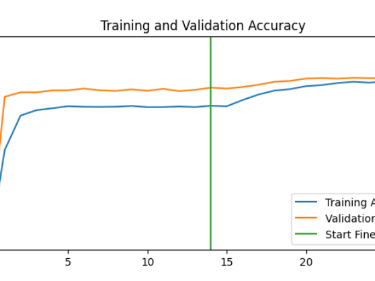

Imported the models from Keras with pre-trained ImageNet weights and froze the base model's layers so they would not be updated during initial training. Then built a new model using the Functional API, adding a classification head on top of the base model.

Compiled the models with the Adam optimizer and the CategoricalCrossentropy loss function.

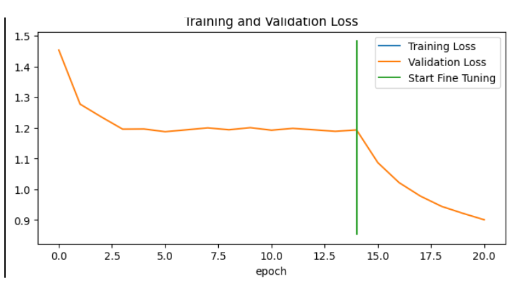

For each model, I trained with the base model frozen for 15 epochs, then unfroze the last ~10 layers and fine-tuned for a further 5 epochs with a much lower learning rate.
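The two-phase schedule described here can be sketched roughly as follows. This is an illustration rather than the project's notebook code: MobileNetV2 stands in for whichever base model is being tested, and the 224x224 input size, the pooling head, and both learning rates are assumptions.

```python
import tensorflow as tf

NUM_CLASSES = 18  # number of categories in the dataset


def build_frozen_classifier(weights="imagenet", input_shape=(224, 224, 3)):
    """Return (model, base) with the pre-trained base frozen."""
    base = tf.keras.applications.MobileNetV2(
        input_shape=input_shape, include_top=False, weights=weights
    )
    base.trainable = False  # phase 1: only the new head is trained

    inputs = tf.keras.Input(shape=input_shape)
    # MobileNetV2 expects pixel values scaled to [-1, 1].
    x = tf.keras.layers.Rescaling(1.0 / 127.5, offset=-1.0)(inputs)
    x = base(x, training=False)  # keep BatchNorm layers in inference mode
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    outputs = tf.keras.layers.Dense(NUM_CLASSES, activation="softmax")(x)

    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=1e-3),  # assumed value
        loss=tf.keras.losses.CategoricalCrossentropy(),
        metrics=["accuracy"],
    )
    return model, base


def unfreeze_top_layers(model, base, n_layers=10, learning_rate=1e-5):
    """Phase 2: make the last n_layers of the base trainable and recompile."""
    base.trainable = True
    for layer in base.layers[:-n_layers]:
        layer.trainable = False
    # Recompiling is needed for the trainable change to take effect.
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=learning_rate),
        loss=tf.keras.losses.CategoricalCrossentropy(),
        metrics=["accuracy"],
    )


# Hypothetical usage; train_ds and val_ds would be tf.data datasets of
# (image, one-hot label) batches:
# model, base = build_frozen_classifier()
# model.fit(train_ds, validation_data=val_ds, epochs=15)
# unfreeze_top_layers(model, base)
# model.fit(train_ds, validation_data=val_ds, epochs=5)
```

Passing the base through `training=False` keeps its batch-normalization statistics fixed even after some layers are unfrozen, which is the usual recommendation when fine-tuning with a small learning rate.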

Chose SSD MobileNet V2 for object detection due to its balance of accuracy and computational efficiency. MobileNet [4] is a lightweight convolutional neural network designed for mobile and resource-constrained devices. It uses depthwise separable convolutions to reduce computational complexity and memory usage while maintaining accuracy across a variety of vision tasks.
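To make the saving from depthwise separable convolutions concrete, here is a small worked calculation comparing weight counts (biases ignored) for a standard convolution and a depthwise separable one. The layer sizes are illustrative choices, not values taken from the model.

```python
def standard_conv_params(kernel, c_in, c_out):
    """Weights in a standard kernel x kernel convolution."""
    return kernel * kernel * c_in * c_out


def depthwise_separable_params(kernel, c_in, c_out):
    """Weights in a depthwise (per-channel) conv plus a 1x1 pointwise conv."""
    depthwise = kernel * kernel * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


# Illustrative layer: 3x3 kernel, 32 input channels, 64 output channels.
standard = standard_conv_params(3, 32, 64)
separable = depthwise_separable_params(3, 32, 64)
print(standard, separable, round(standard / separable, 2))
```

For this layer the separable version needs 2,336 weights instead of 18,432, roughly an 8x reduction, and the same ratio applies to the multiply-accumulate operations per output position.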

Final Thoughts

Object Detection

For the object detection itself, I used the TensorFlow Object Detection API. First, I installed the API in a Google Colab instance, which requires cloning the TensorFlow models repository and running its installation commands.

After importing the dataset from Google Drive, I created a labelmap for the detector and converted the images into TFRecords, the data file format TensorFlow uses for training. I used Python scripts to convert the data automatically. Before running them, I defined the labelmap for my classes by creating a "labelmap.txt" file that contains the list of classes.

I downloaded and ran the data conversion scripts from the GitHub repository. They create TFRecord files for the train and validation datasets, as well as a labelmap.pbtxt file that contains the labelmap in a different format. I stored the locations of the TFRecord and labelmap files as variables so I could reference them later in the Colab session.
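The conversion scripts themselves are not reproduced here. As a rough sketch of the labelmap step only, assuming "labelmap.txt" holds one class name per line, the .pbtxt text that the Object Detection API reads could be generated like this (both function names are hypothetical):

```python
def parse_labelmap_txt(text):
    """Return the non-empty lines of labelmap.txt as a list of class names."""
    return [line.strip() for line in text.splitlines() if line.strip()]


def labelmap_to_pbtxt(class_names):
    """Build label map text with one `item` block per class."""
    items = []
    # IDs start at 1 because 0 is reserved for the background class.
    for class_id, name in enumerate(class_names, start=1):
        items.append(f"item {{\n  id: {class_id}\n  name: '{name}'\n}}\n")
    return "\n".join(items)


# Illustrative class names only; the real labelmap lists all 18 categories.
classes = parse_labelmap_txt("plastic_water_bottles\nglass_beverage_bottles\n\n")
pbtxt = labelmap_to_pbtxt(classes)
print(pbtxt)
```

The order of names in the text file determines the numeric IDs, so the same file has to be used consistently for training and for reading detection results later.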

Then I set up the model and training configuration, specifying which pre-trained TensorFlow model to use from the TensorFlow 2 Object Detection Model Zoo.

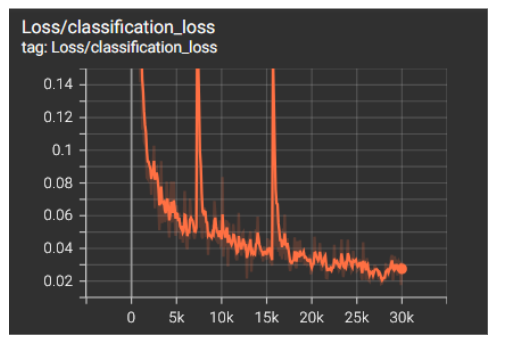

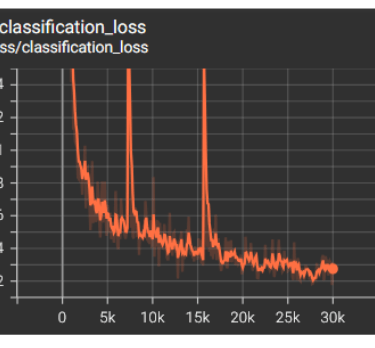

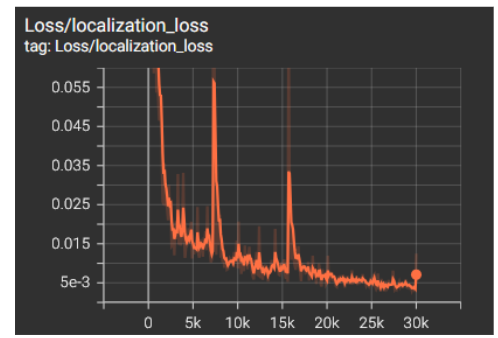

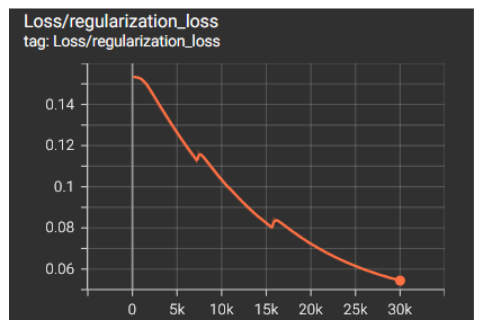

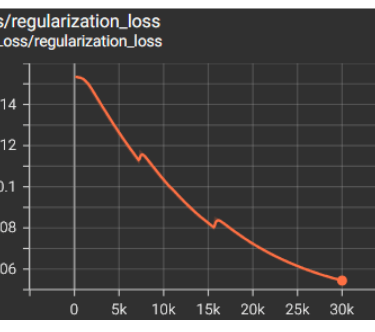

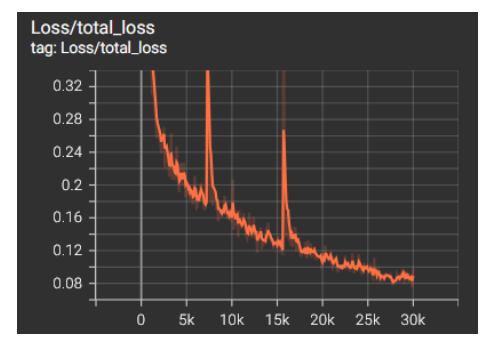

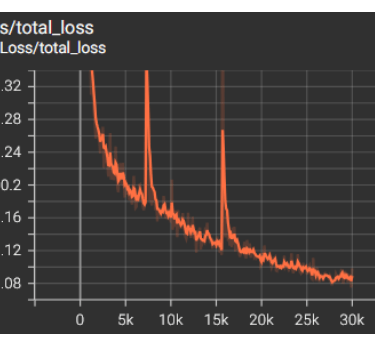

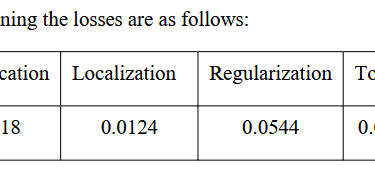

I modified the config file to set the high-level training parameters:

30,000 steps

Batch size of 16 (optimized for Google Colab GPU memory)

Monitored the loss function using TensorBoard.
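One simple way to apply the step count and batch size settings is a text substitution on the pipeline config. The sketch below assumes the training `batch_size` is the first `batch_size` entry in the file and that `num_steps` appears once; the config fragment is a made-up stand-in for the real file, and `set_training_params` is a hypothetical helper.

```python
import re


def set_training_params(config_text, num_steps, batch_size):
    """Return config_text with the training step count and batch size replaced."""
    # count=1 so only the first batch_size (assumed to be the training one)
    # changes; a later evaluation batch_size is left alone.
    config_text = re.sub(
        r"batch_size:\s*\d+", f"batch_size: {batch_size}", config_text, count=1
    )
    config_text = re.sub(
        r"num_steps:\s*\d+", f"num_steps: {num_steps}", config_text, count=1
    )
    return config_text


# Made-up fragment standing in for the real pipeline config.
SAMPLE_CONFIG = """train_config {
  batch_size: 128
  num_steps: 50000
}
eval_config {
  batch_size: 1
}
"""

updated = set_training_params(SAMPLE_CONFIG, num_steps=30000, batch_size=16)
print(updated)
```

If the chosen config also uses a learning-rate schedule tied to the total number of steps, that value would need to be kept consistent with `num_steps` as well.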

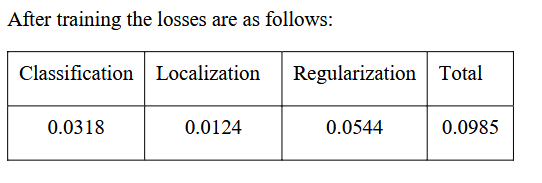

Performance Evaluation

After training, I used TensorFlow's TFLiteConverter to convert the model to the .tflite FlatBuffer format. Using the test image dataset and labelmap, I ran the model on each image and displayed the results. I also saved the detection results as text files so I could use them to calculate the model's mAP score.

I used the mAP calculator tool[7] to determine my model's mAP score. First, I cloned the repository and removed its existing example data. I then copied the images and annotation data from the test folder into the appropriate folders inside the cloned repository. These serve as the "ground truth data" that my model's detection results are compared against. The calculator tool expects annotation data in a format different from the Pascal VOC .xml format I was using, so I used convert_gt_xml.py to convert it to the expected .txt format.

One popular style for reporting mAP is the COCO metric, mAP @ 0.50:0.95. mAP is calculated at several IoU thresholds between 0.50 and 0.95, and the results from each threshold are averaged to get a final mAP score.
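As a small illustration of the pieces involved (not the calculator tool's own code), the sketch below computes the IoU of two boxes, lists the ten COCO thresholds, and averages per-threshold mAP values. Boxes are assumed to be `(xmin, ymin, xmax, ymax)` tuples, and the helper names are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two (xmin, ymin, xmax, ymax) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap; zero when the boxes do not intersect.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


# IoU thresholds from 0.50 to 0.95 in steps of 0.05 (ten values).
COCO_IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def coco_map(map_at_threshold):
    """Average mAP values keyed by IoU threshold into mAP @ 0.50:0.95."""
    total = sum(map_at_threshold[t] for t in COCO_IOU_THRESHOLDS)
    return total / len(COCO_IOU_THRESHOLDS)


# Two 10x10 boxes offset by 5 pixels overlap on half their area.
overlap = iou((0, 0, 10, 10), (5, 0, 15, 10))
print(round(overlap, 3))
# A detection with this IoU counts as a match only at thresholds it reaches.
print([t for t in COCO_IOU_THRESHOLDS if overlap >= t])
```

Because the stricter thresholds reject loosely fitting boxes, mAP @ 0.50:0.95 is always at most the mAP reported at IoU 0.50 alone.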

An mAP of around 50 percent would be desirable. However, my model's score is lower than that. It could be improved by adding more images to the training dataset or by training the model for more steps.

Embedded Deployment

To check that the .tflite model runs, I first ran object detection on my laptop using a webcam. I created a Python environment using Anaconda Prompt and downloaded the detection script from the GitHub repository.

I then moved on to running it on a Raspberry Pi Model B connected to a Camera Module V2. Although I successfully set up the Pi and created a Python environment, the Pi would not recognize the camera module.

Project Presentation

Future Improvements

Expand dataset with more diverse, real-world waste images.

Increase training iterations and fine-tune hyperparameters.

Explore model fusion (combining ResNet50 and MobileNet outputs) for better accuracy.

Fully integrate on a Raspberry Pi with functioning camera hardware for live demonstrations.

This project showcases my ability to take a problem from real-world observation through data collection, model development, optimization, and attempted deployment on an embedded platform. It reflects not only my technical skills in computer vision, transfer learning, and edge AI deployment, but also my ability to troubleshoot challenges and adapt when hardware limitations arise.

While the hardware setback prevented full Raspberry Pi integration, the functional prototype demonstrates the feasibility of real-time recyclable waste classification in practical environments. With additional dataset expansion and hardware testing, this system could be readily deployed in cafeterias, recycling centers, and other high-throughput waste management facilities.
