Embedded Systems and Machine Learning

Program: Master of Science in Electrical Engineering

Semester: Fall 2024

Instructor: Dr. Sudeep Pasricha

Course Overview

Machine learning is rapidly transforming embedded systems, from smartphones and IoT devices to autonomous vehicles. This course focused on designing efficient, optimized deep learning models for embedded platforms. We explored cutting-edge research and implementation techniques that bridge the gap between ML theory and real-world, resource-constrained environments.

Key areas of focus:

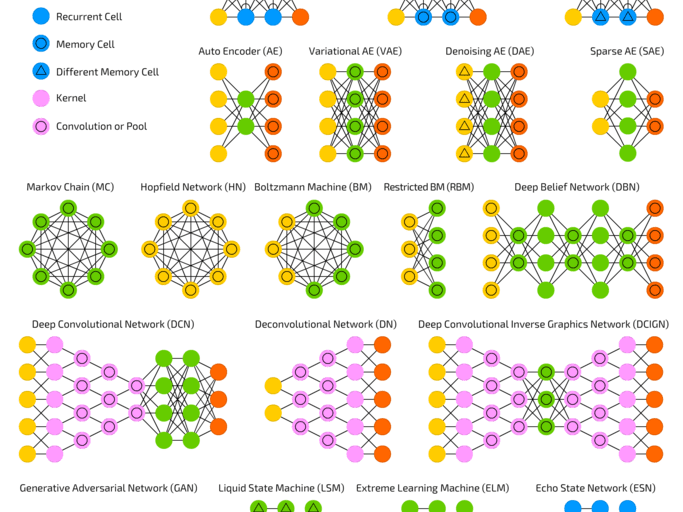

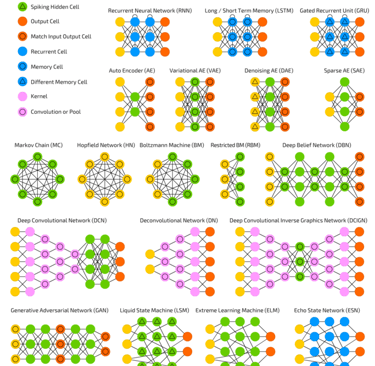

Deep learning fundamentals and architectures (DNNs, CNNs, RNNs)

Software optimization for low-power and memory-limited platforms

Hardware acceleration using specialized architectures

Hardware-software co-design techniques

Emerging paradigms: processing-in-memory, photonics, memristors

Hands-on projects using Python and simulation tools

Deep Learning Architectures

Fundamentals of Deep Neural Networks (DNNs) and Convolutional Neural Networks (CNNs)

Training pipelines, forward propagation, and backpropagation

Techniques to mitigate overfitting: dropout, regularization
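To make the dropout idea concrete, here is a minimal sketch of "inverted" dropout on a plain Python list of activations. The function name `inverted_dropout` and its parameters are hypothetical and chosen for illustration only. Frameworks such as PyTorch and TensorFlow ship their own dropout layers, and this sketch is not the course's implementation. Survivors are rescaled during training, so the expected activation is unchanged and inference becomes a plain identity pass.

```python
import random

def inverted_dropout(activations, p_drop, training=True, rng=random):
    """Zero each activation with probability p_drop; scale survivors by 1/(1 - p_drop)."""
    if not training or p_drop == 0.0:
        # Inference: identity, because the scaling was already applied during training
        return list(activations)
    keep = 1.0 - p_drop
    # Each unit is kept independently with probability `keep`
    return [a / keep if rng.random() < keep else 0.0 for a in activations]
```

Because the rescaling happens at training time, a deployed embedded model needs no extra multiply per activation at inference.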

Software Optimization Techniques

Model compression: quantization, pruning, and weight sharing

Memory-efficient inference with reduced-precision arithmetic

Latency, power, and memory profiling tools and methods
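As an illustration of quantization, the following sketch shows one common scheme: per-tensor affine (scale and zero-point) 8-bit quantization of float weights. Storing uint8 codes instead of float32 values cuts weight storage by roughly 4x. The helper names `quantize_uint8` and `dequantize` are hypothetical. Production toolchains (for example TensorFlow Lite or PyTorch quantization) use their own APIs and often per-channel variants.

```python
def quantize_uint8(weights):
    """Affine-quantize a list of floats to uint8 codes; returns (codes, scale, zero_point)."""
    # Include 0.0 in the range so that zero is exactly representable
    w_min = min(min(weights), 0.0)
    w_max = max(max(weights), 0.0)
    scale = (w_max - w_min) / 255 or 1.0   # fall back to 1.0 for an all-zero tensor
    zero_point = round(-w_min / scale)     # integer code that represents 0.0
    # Round to the nearest grid point, then clamp to the uint8 range [0, 255]
    codes = [max(0, min(255, round(w / scale) + zero_point)) for w in weights]
    return codes, scale, zero_point

def dequantize(codes, scale, zero_point):
    """Map uint8 codes back to approximate float values."""
    return [(q - zero_point) * scale for q in codes]
```

The reconstruction error per weight is on the order of one quantization step (`scale`). This is why accuracy is usually re-validated after quantizing a model.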

Hardware Acceleration for ML

Efficient deployment using GPUs, TPUs, FPGAs, and custom ASICs

Hardware-aware model tuning and co-design

Real-time ML inference and throughput optimization

Sequence and Time-Series Processing

Recurrent models: RNNs, LSTMs, GRUs

Applications in speech recognition, sensor analytics, and control systems

Optimization of sequential models for embedded platforms

Unsupervised Learning Methods

Clustering techniques (e.g., K-means, DBSCAN)

Dimensionality reduction (e.g., PCA, t-SNE)

Pattern discovery and feature learning without labels
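For the clustering topic, here is a minimal sketch of K-means using Lloyd's algorithm, which alternates an assignment step and a centroid-update step. Initializing from the first `k` points is a simplifying assumption; practical implementations typically use k-means++ seeding and a convergence test. The function name `kmeans` is illustrative.

```python
import math

def kmeans(points, k, iters=20):
    """Lloyd's algorithm on a list of equal-length coordinate tuples."""
    centroids = [list(p) for p in points[:k]]  # naive init: first k points (assumption)
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid (Euclidean distance)
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its assigned points
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the previous centroid if a cluster ends up empty
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return centroids, labels
```

For example, `kmeans([(0, 0), (0, 1), (10, 10), (10, 11)], k=2)` separates the two nearby pairs into different clusters.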

Anomaly Detection and Embedded Security

Real-time outlier detection for edge devices

Machine learning for intrusion and fault detection

Building trust and robustness into ML-powered systems
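One simple way to do real-time outlier detection on a memory-limited edge device is a streaming z-score test. The sketch below uses Welford's online mean and variance, so it stores only three numbers regardless of stream length. The class name `StreamingZScore`, the 3.0 threshold, and the warm-up length are assumptions for illustration. This is one basic technique among many, not necessarily the method used in the course.

```python
import math

class StreamingZScore:
    """Flag outliers in a 1-D sensor stream with O(1) memory (Welford's online statistics)."""

    def __init__(self, threshold=3.0, warmup=10):
        self.threshold = threshold    # z-score cutoff (assumed value; tune per sensor)
        self.warmup = max(warmup, 2)  # need at least 2 samples for a sample variance
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0                 # running sum of squared deviations from the mean

    def update(self, x):
        """Return True if x is an outlier relative to the samples absorbed so far."""
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))
            if std > 0 and abs(x - self.mean) / std > self.threshold:
                return True           # outliers are not absorbed, so they cannot skew the stats
        # Welford's update: numerically stable incremental mean and variance
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)
        return False
```

Skipping flagged samples keeps the baseline clean. The trade-off is that a genuine, lasting shift in the signal would keep being flagged until the detector is reset.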

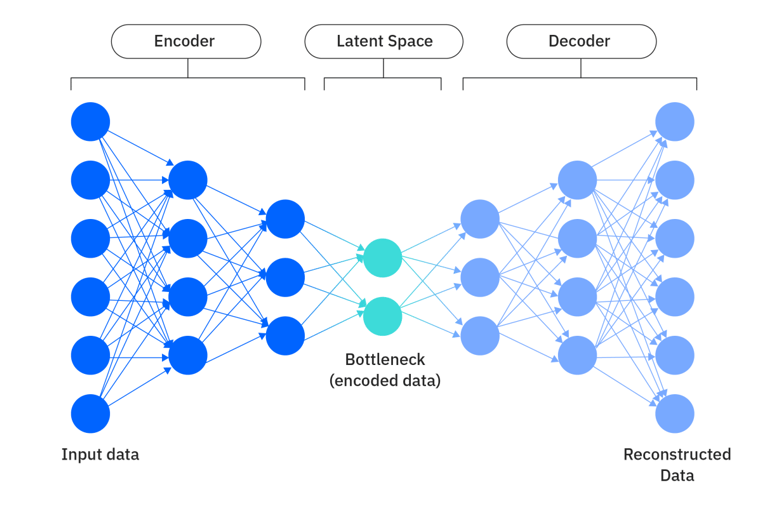

Generative Modeling

Autoencoders and Variational Autoencoders (VAEs)

Generative Adversarial Networks (GANs) for synthetic data

Applications in data augmentation and model compression

Advanced Embedded ML Techniques

Lightweight architectures (MobileNet, SqueezeNet) and TinyML deployment

Neural Architecture Search (NAS) and knowledge distillation

Balancing trade-offs: accuracy, speed, and hardware efficiency
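To illustrate knowledge distillation, the sketch below computes the common temperature-softened distillation loss for a single example. It blends ordinary cross-entropy on the hard label with the KL divergence between the teacher's and student's softened output distributions. The function names, the temperature of 4.0, and the 0.5 blending weight are illustrative assumptions.

```python
import math

def softmax(logits, temperature=1.0):
    """Numerically stable softmax with an optional temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max before exponentiating to avoid overflow
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, label, temperature=4.0, alpha=0.5):
    """alpha * hard-label cross-entropy + (1 - alpha) * T^2 * KL(teacher || student)."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    hard_ce = -math.log(softmax(student_logits)[label])
    # The T^2 factor keeps the soft-target term on a comparable scale as T changes
    return alpha * hard_ce + (1 - alpha) * temperature ** 2 * kl
```

A higher temperature exposes more of the teacher's relative confidence in the wrong classes. That extra signal is what lets a small student model recover accuracy it would not reach from hard labels alone.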

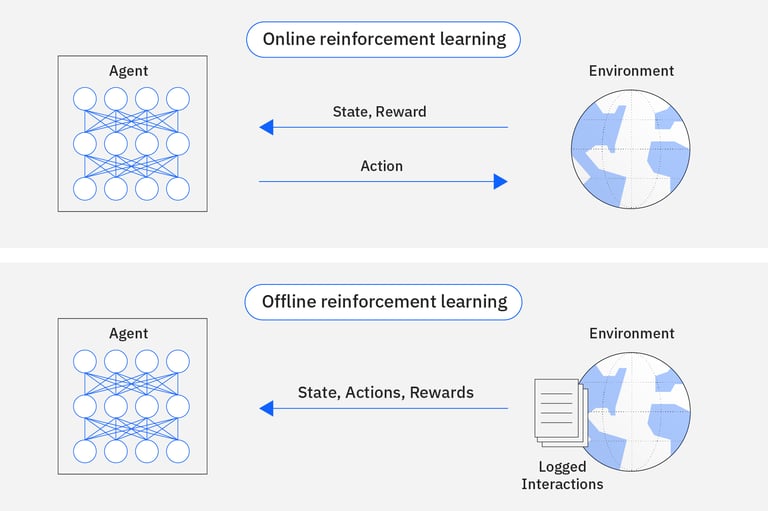

Reinforcement Learning

Policy-based and value-based methods (e.g., Q-learning)

Real-world applications in embedded control and automation

Designing efficient RL agents under constrained resources
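As a compact example of a value-based method, here is tabular Q-learning on a toy corridor. The agent starts at state 0 and receives a reward of 1 for reaching the last state. The environment, the function name `q_learning`, and the hyperparameters are assumptions for illustration. A lookup table like this fits easily in microcontroller memory for small state spaces.

```python
import random

def q_learning(n_states=5, episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning on a toy corridor; action 0 = left, 1 = right."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(n_states)]  # q[state][action]
    goal = n_states - 1
    for _ in range(episodes):
        s = 0
        while s != goal:
            # Epsilon-greedy selection; ties between actions are broken randomly
            if rng.random() < epsilon or q[s][0] == q[s][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[s][0] > q[s][1] else 1
            s_next = max(0, s - 1) if a == 0 else s + 1
            r = 1.0 if s_next == goal else 0.0
            # TD target: the terminal state contributes no future value
            target = r if s_next == goal else r + gamma * max(q[s_next])
            q[s][a] += alpha * (target - q[s][a])
            s = s_next
    return q
```

After training, the greedy policy (the action with the larger Q-value in each state) should be "move right" everywhere, with values that shrink by a factor of `gamma` per step away from the goal.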

Emerging Computing Paradigms for ML

Processing-in-memory and near-memory computing

Memristor-based learning architectures

Photonic computing and neuromorphic systems

Future directions in machine learning hardware

Technical Scope

Project: Real-Time Recyclable Waste Classifier

The goal of this project was to develop a real-time machine learning system capable of classifying recyclable and non-recyclable waste using image data. By leveraging transfer learning and lightweight object detection models like SSD MobileNet V2, the system was designed for deployment on resource-constrained embedded platforms such as the Raspberry Pi. The broader objective was to automate waste sorting processes in environments like dining facilities, reducing manual labor and improving recycling efficiency.

Final Reflection

This course significantly strengthened my ability to design and implement resource-efficient machine learning solutions by bridging the gap between high-level ML theory and low-level hardware-aware optimization. Through hands-on labs, technical readings, and the final project, I developed a deeper understanding of how to adapt and deploy ML models within the strict performance and energy constraints of embedded systems. I learned to think critically about the full stack, from algorithm selection and data handling to model compression and hardware acceleration strategies. Importantly, the course also introduced me to cutting-edge research in emerging compute paradigms such as processing-in-memory, neuromorphic computing, and photonics, which broadened my perspective on where embedded ML is headed.

Connect

Explore my projects and coursework for insights.

afiah468@gmail.com

+970-689-1697

© 2025. All rights reserved.